All the gifts have been evenly distributed among the relatives, so I finally have some time to write a new blog post. I really wish I could say I got the camera calibration done, but unfortunately that’s not the case. However, there is still some interesting stuff going on.

I wanted to have the camera calibration done already, but then an unexpected challenge appeared. In one of my earlier reports I mentioned vignetting as a potential troublemaker. I hoped the problems caused by vignetting would be avoided by the preprocessing I was doing earlier. It turns out I was wrong. I had been testing the preprocessing on a few randomly picked calibration images, but when I ran the calibration routine on all the calibration images provided by the Lytro camera, about a third of them failed miserably. All of the failures were due to vignetting breaking the lens detection in the image corners.

The first thought was to remove the vignetting but keep the algorithm, but how? The first idea that came to my mind was to use the Discrete Fourier Transform (DFT), partly because I had already considered using the DFT to find the grid itself, as the grid is nicely periodic. I later dismissed that as too complicated (read: “I don’t remember much so I would have to relearn it”) due to changes in spacing every few lines.

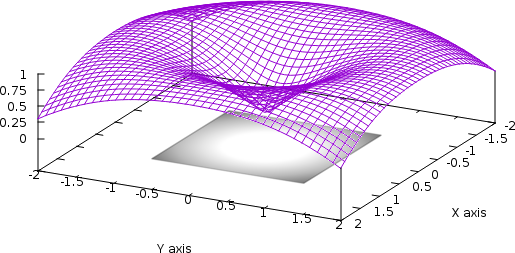

I don’t know why, but quick Google didn’t return any results on the topic of using DFT to remove vignetting. I’m actually quite puzzled by that, as Fourier transform seems like an obvious solution to me. Let me show you a picture:

On the XY plane is an image with vignetting. Above it is a plot of the function z = sin(sqrt(x^2+y^2)), which is easy to model. You can see that the vignetting closely follows the function above it. In the frequency domain, we can easily use a similar function to remove the vignetting. We just need to figure out which coefficients need to be changed and how.

After a few more experiments I realized something that had never occurred to me before, but which is quite obvious. For the lens preprocessing, I’m interested only in the lenses and not in any large-scale objects. So I may as well remove all the low frequencies. And it works!

This is not entirely different from the previous approach. Basically, what I was doing before was filtering out all frequencies except the highest ones using an edge detection algorithm. The rest was only cleaning up the result of the edge detection. By removing only the lowest frequencies, I avoided the sensitivity to any little 1-pixel changes.

The removal of very low frequencies works so well that I could ditch all the complicated preprocessing code described in one of the previous posts and replace it with a two-step process:

- Remove very low frequencies

- Thresholding
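
To make the two steps a bit more concrete, here is a minimal sketch in Python with NumPy. It is not the code from my calibration routine: the function names, the `cutoff` value of 4 cycles per image, and the choice to threshold at zero are all assumptions made for illustration, and the right cutoff would have to be tuned to the lens spacing.

```python
import numpy as np


def remove_low_frequencies(image, cutoff=4):
    """Zero every DFT coefficient within `cutoff` cycles per image of DC.

    `cutoff` is a hypothetical tuning parameter, not a value from the post.
    """
    spectrum = np.fft.fft2(image)
    rows, cols = image.shape
    # Integer frequency indices (cycles per image) along each axis.
    fy = np.rint(np.fft.fftfreq(rows) * rows)
    fx = np.rint(np.fft.fftfreq(cols) * cols)
    # Radial distance of every coefficient from the DC term.
    radius = np.hypot(fy[:, None], fx[None, :])
    # Drop the DC term and all slowly varying components.
    spectrum[radius <= cutoff] = 0
    # The input is real, so the imaginary part is only rounding noise.
    return np.real(np.fft.ifft2(spectrum))


def preprocess(image, cutoff=4):
    """Step 1: remove very low frequencies. Step 2: threshold."""
    filtered = remove_low_frequencies(image, cutoff)
    # With the DC term gone the filtered image has zero mean, so zero is
    # one simple threshold (an assumption; other thresholds are possible).
    return filtered > 0
```

Calling `preprocess(image)` on a 2D grayscale array returns a boolean mask of the same shape. Because the slowly varying brightness is gone before thresholding, a single global threshold can be used for both the center and the corners.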

To improve the results even more, I tweaked the code a bit so that not only the vertical lines but also the horizontal lines are detected using pixels from the image center, which is less affected by lens deficiencies.